"opportunities for improvement"<

/em> exist in our databases, then we might as well be blind folded and throwing darts. To arrive at articulate problem statements and precisely defined "opportunities for improvement", one must complete statement workload analysis.

I will illustrate this process by way of an example workload and completed analysis. I often teach this concept in presentations with an interactive animated PowerPoint slide that you can view by clicking this link (Right Click and choose Save As...).

Here are some example statements that are blasting through your database at a high rate of speed:

<

table border=1 cellpadding=2 cellspacing=2>

Statement

Number |

Statement

Text |

CPU Sec |

Sort ms |

Exec sec |

| 1 |

Select C1, C2 from TB where C3 = '100' |

.1 |

0 |

.15 |

| 2 |

Select C1, C2 from TB where C3 = '200' |

.1 |

0 |

.15 |

| 3 |

Select C1, C2 from TB where C3 = '300' |

.1 |

0 |

.15 |

| 4 |

Select C1, C2 from TB where C3 = '400' |

.1 |

0 |

.15 |

| 5 |

Select C1, C2 from TB where C4 > 1000 |

.3 |

0 |

.50 |

| 6 |

Select C1, C2 from TB where C4 > 9000 |

.3 |

0 |

.50 |

| 7 |

Select C1, C2 from TB where C3 = '500' |

.1 |

0 |

.15 |

| 8 |

Select C1, C2 from TB where C3 = '600' |

.1 |

0 |

.15 |

| 9 |

Select C5, C6 from TB where C8 > 5000 order by C5 |

.5 |

500 |

.70 |

| 10 |

Select C1, C2 from TB where C3 = '700' |

.1 |

0 |

.15 |

| 11 |

Select C1, C2 from TB where C3 = '800' |

.1 |

0 |

.15 |

| 12 |

Select C1, C2 from TB where C3 = '900' |

.1 |

0 |

.15 |

| 13 |

Select C1, C2 from TB where C3 = '910' |

.1 |

0 |

.15 |

To aggregate costs around unique statement structures or patterns, one must remove any extra white space from the statements and replace literal values with token place holders. For each matching statement pattern, the costs attributable to that statement pattern should be summed. After completing this statement workload analysis process, we can see the total number of executions of each statement pattern, and the total costs for CPU, Sort, and Elapsed time (other cost metrics such as Rows Read, Rows Written, Logical Reads, and others can be summed as well). What's more, all of the totals should be added together to obtain the total cost of the entire workload. With the total cost of the entire workload in hand, the individual statement totals can be expressed as a relative percentage of the entire workload.

Using the sample statements above as input, the completed statement workload analysis looks like this:

Execution

Count |

Statement

Pattern |

CPU Sec |

CPU Pct |

Sort ms |

Sort Pct |

Exec sec |

Exec Pct |

| 10 |

Select C1, C2 from TB where C3 = :ls |

1.0 |

47.6% |

0 |

0% |

1.5 |

46.9% |

| 2 |

Select C1, C2 from TB where C4 > :ln |

.6 |

28.6% |

0 |

0% |

1.0 |

31.2% |

| 1 |

Select C5, C6 from TB where C8 > :ln order by C5 |

.5 |

23.8% |

500 |

100% |

.70 |

21.9% |

| 13 |

TOTALS |

2.1 |

100% |

500 |

100% |

3.2 |

100% |

This illustration, of course, is extremely simple. With some unintuitive irony, the statement having the lowest average CPU consumption per execution (.1 Sec) has the highest aggregate CPU cost. Your actual workloads will be much more complex.

OLTP Databases

Statement workloads in OLTP databases are highly repetitive. Analyzing as little as 15 to 60 minutes of data often provides invaluable cost information. Over a one hour time period, most OLTP databases execute thousands of individual statements, but often just a few hundred unique statement patterns.

Over the years, and as recently as last week, I have seen class students, clients, and customers experience remarkably well stated problems like these:

- A SELECT statement accessing a table with only 32 rows consumes 34% of the CPU time on an SMP 4-way host

- A SELECT statement that consumes 97% of the CPU time and 90% of the elapsed time on an SMP 8-way host

- Two SELECT statements using over 60% of CPU time and 65% of the elapsed time on an X/86 dual core Linux host

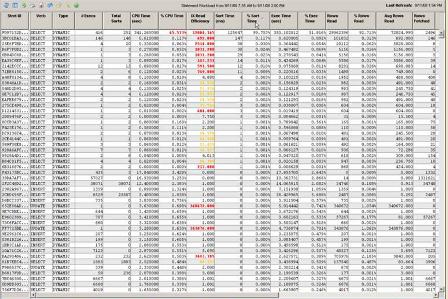

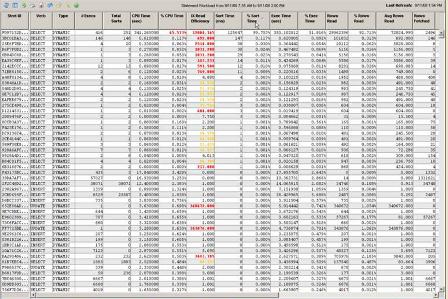

- And, just last week, a SELECT statement consuming 66% of the CPU time, 99.8% of the Sort Time, and 51.8% of the Elapsed Execution time. The statement read 92.7% of all total Rows Read by the database with Average Rows Read per execution of 72,025 rows. The Index Read Efficiency was 12,004. Remarkably, the average response time for this statement was .84 seconds. A sample analysis is shown below.

- Click image to see a larger image in a new window -

These findings are not uncommon. Mosquito swarms lurk in nearly every database on the planet. Even brand name applications like SAP, PeopleSoft, Siebel, Content Manager, JD Edwards, and many more are plagued with mosquito infestations that are quietly sucking the life out of your CPUs and degrading your business productivity and profitability.

Mosquito Infestations are Invisible to the Human Eye

Mosquito infestations exist because they cannot be detected by any other method or means. Most (good) DBAs diligently EXPLAIN each statement BEFORE they go into production, but the EXPLAINS of mosquito statements (like the problems shown above) all showed very low timeron costs. EXPLAINS do not consider frequency of execution.

Let us be clear on this point:

- Total Cost = Individual Execution Cost X Frequency.

Have you ever wondered why statement Frequency is an input parameter to the IBM DB2 Design Advisor? Now you know.

Data Warehouse Databases

For data warehouse databases, repetitious patterns of statement activity emerge over extended time periods such as a week or month. Decision Support Analysts tend to have their favorite queries and reports that they will run daily, weekly, or monthly. When you complete statement workload analysis over an extended period of time, it will become quite evident which periodic queries will benefit from physical design tuning. True, you may observe 1000's of one-off statements, but you will find statements with multiple executions that have relatively high aggregate costs. When you successfully tune the statements having frequency greater than 7 over a 7 day period, you will measurably reduce processing costs and improve the performance of the Data Warehouse for all users.

I recently worked with another company having a Data Warehouse database. We looked at all cost aggregated SQL executed over an eight day period. We passed the top few hundred statements having the highest frequency of aggregated execution to the IBM Design Advisor as a single workload. The Design Advisor evaluated over 50,000 physical design solutions and provided us with several high quality MDC table and index recommendations.

Getting it Done

If you have access to LOTS of inexpensive labor, you can attempt this workload analysis method by hand. I know of a team of two DBAs who spent two weeks analyzing one hour of collected statement data by hand with an Excel spreadsheet. After 160 hours of labor, they found one statement using about 25% of the CPU time in the database. After spending another 40 hours on The DNA Test of Performance Accountability, they further determined that 90% of the transaction elapsed time was OUTSIDE the database. Imagine that - after 3 weeks of blood, sweat, and tears of manual boring labor, the company concluded the performance issue wasn't in the database. Can your organization tolerate poor performance for three weeks?

Hopefully it is self-evident and apparent to you that some kind of tool, or automated computerized process, is required to complete proper Statement Workload and Transaction Time Analysis.

When you go tool shopping:

- Make sure the tool computes aggregated Statement Workload Analysis as described above

- Make sure the tool does NOT attempt to save EVERY statement in its performance repository - this will flood your network and consume terabytes of disk storage. Instead, look for a tool that stores small, compressed aggregated summaries in its performance repository.

- Make sure the tool can aggregate statement costs between any two timestamps - thus allowing you to review performance for the prior three hours, the prior night, Monday through Friday of the week before, the prior month or months, or any time window that is of interest to your management

- Make sure the tool includes STATIC statements in its Statement Workload Analysis - STATIC SQL is used by Stored Procedures, many applications, and is growing in popularity thanks to IBM Data Studio

- Make sure the tool doesn't rely on db2pd for input - the statement information from db2pd does not contain cost attributes

- Make sure the tool doesn't create Event Monitors that write their information to DB2 tables in the monitored database - this can cause up to a 400% explosion of activity in your database that is already suffering from performance issues (that's why you are trying to monitor it, right? )

The Shameless Marketing Moment

|

Check, Check, Check, Check, Check, and Check! DBI's Brother-Panther™ provides industry leading, best of breed, statement workload analysis capabilities. You will quickly see the statements that have high aggregate costs, and you can pass individual statements or entire costly workloads to the IBM Design Advisor for rapid solutions. After you have implemented a physical design change with the intent of improving performance, Brother-Panther's Performance Trend Charts with integrated, correlated, and plotted change event histories make it easy to verify your performance improvement success. No tool on the market makes the full performance management life cycle of identify, isolate, cure, and verify easier, nor can any other tool deliver better performance results.

|

Just for Fun

Have you visited JibJab® lately? This is funny stuff. This site even makes it possible to cast your own head, or the head of a friend or family member, into an animated skit.

With kindest regards,

Scott

Scott Hayes

President & CEO, DBI

IBM GOLD Consultant

DBI is an IBM Advanced, Industry Optimized, Business Partner

DBI is an Oracle Technology Network Partner

DBI provides products and services to over 2,000 customers worldwide

www.DBISoftware.com

Your Performance IS Our Business

Post from : http://www.dbisoftware.com/blog/db2_performance.php

Printed from : http://www.dbisoftware.com/blog/db2_performance.php?id=123